Key Insights That Will Make Your Next AI PC Refresh A Success


For decades, PC upgrades followed a predictable script: faster CPUs, more RAM, better GPUs. But the rise of AI PCs changes that script. This isn't an incremental step; it's a fundamental shift in how computers handle machine learning workloads.

Unlike the empty hype of "blockchain laptops" or "metaverse-ready" hardware, AI PCs deliver real, measurable gains, especially for engineers, system architects, and IT teams managing hardware fleets. The secret? On-device AI processing. Instead of relying on sluggish cloud APIs, these machines pack dedicated neural processing units (NPUs) that handle machine learning tasks locally. The result? Faster code completions, real-time design optimizations, and AI-assisted debugging, all without sending sensitive data to a remote server.

But here's the catch: not all AI PCs are equal. With Intel's Lunar Lake, AMD's Ryzen AI 300, Qualcomm's Snapdragon X Elite, and Apple's M4 all competing for dominance, choosing the right hardware requires more than comparing specs. TOPS (trillions of operations per second) ratings tell part of the story, but thermal limits, software support, and memory bandwidth decide who wins in practice.

Whether you’re refreshing a single workstation or deploying a thousand corporate laptops, one thing’s clear: The AI PC era demands a new playbook.

How to Strategically Future-Proof an AI PC

Spec sheets flood the market with numbers—teraflops, TOPS, core counts—but raw performance alone doesn’t define a capable AI PC. Intel’s framework for evaluating these systems breaks down into four critical dimensions, each with nuances engineers and IT leaders must weigh.

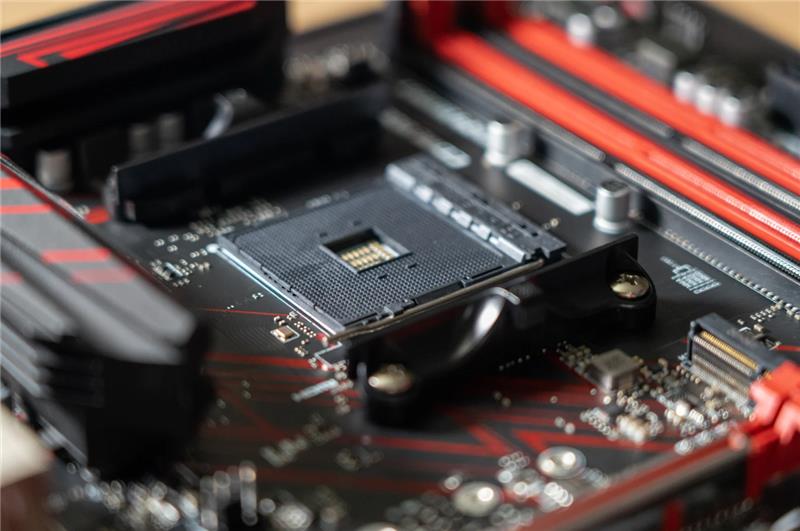

Hardware: The NPU Becomes Non-Negotiable

At the heart of every AI PC lies the neural processing unit, a dedicated accelerator designed for machine learning workloads. Intel's Lunar Lake NPU delivers up to 48 TOPS, and Intel quotes up to 120 TOPS for the whole platform once the CPU and GPU are counted. AMD's Ryzen AI 300 series pushes its XDNA 2 NPU alone to 50 TOPS. Qualcomm's Snapdragon X Elite reaches 45 TOPS while leveraging Arm's power-efficiency advantages. Apple rates the M4's 16-core Neural Engine at 38 TOPS. Vendors do not always quote these figures under identical conditions (data type, clocks, which engines are counted), so cross-vendor comparisons are approximate at best.

These numbers create an illusion of easy comparison, but real-world performance depends on factors beyond peak throughput. Memory architecture determines how quickly data feeds into the NPU, with LPDDR5X or better becoming essential for sustained workloads. Thermal design dictates whether a chip maintains its rated performance or throttles under prolonged use.
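The effect of memory is easy to estimate for local LLM inference, where generating each token typically means streaming most of the model's weights from DRAM. A back-of-the-envelope sketch, assuming a 128-bit memory bus, a roughly 3.5 GB model (a 7B-parameter model quantized to about 4 bits), and no cache reuse or efficiency losses; real throughput will land below this ceiling.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10**9 bytes per second)."""
    return transfer_rate_mts * 1e6 * (bus_width_bits / 8) / 1e9


def max_decode_tokens_per_s(bandwidth_gbs: float, model_size_gb: float) -> float:
    """Upper bound on decode speed if every weight is read once per token."""
    return bandwidth_gbs / model_size_gb


# LPDDR5X-8533 on an assumed 128-bit bus
bw = peak_bandwidth_gbs(8533, 128)
print(round(bw, 1))  # 136.5

# Assumed ~3.5 GB of weights: a 7B-parameter model at ~4 bits per weight
print(round(max_decode_tokens_per_s(bw, 3.5), 1))  # 39.0
```

The NPU's TOPS rating never appears in this bound, which is why two chips with similar ratings can differ sharply on local LLM workloads.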

Software: The Invisible Battleground

Microsoft's Copilot+ PC designation requires an NPU capable of 40 TOPS or higher, but software compatibility varies dramatically across platforms. x86 systems from Intel and AMD benefit from decades of application support, while Qualcomm's Arm-based chips run x64 Windows applications that lack native Arm64 builds through emulation (Microsoft's Prism layer). Apple's tightly integrated Core ML framework delivers seamless performance, but only within its ecosystem.
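For fleet planning, the Copilot+ floor can be checked against an inventory export. A minimal sketch, assuming Microsoft's published launch minimums of 40 NPU TOPS, 16 GB of RAM, and 256 GB of storage (confirm the current requirements before relying on them); the device records are hypothetical, and the check ignores Microsoft's list of qualifying processors.

```python
from dataclasses import dataclass

# Assumed Copilot+ PC minimums as published at launch; verify current terms
MIN_NPU_TOPS = 40
MIN_RAM_GB = 16
MIN_STORAGE_GB = 256


@dataclass
class Device:
    name: str
    npu_tops: float
    ram_gb: int
    storage_gb: int


def copilot_plus_gaps(d: Device) -> list[str]:
    """Return the spec floors a device misses (empty list means it meets all three)."""
    gaps = []
    if d.npu_tops < MIN_NPU_TOPS:
        gaps.append(f"NPU {d.npu_tops} TOPS < {MIN_NPU_TOPS}")
    if d.ram_gb < MIN_RAM_GB:
        gaps.append(f"RAM {d.ram_gb} GB < {MIN_RAM_GB}")
    if d.storage_gb < MIN_STORAGE_GB:
        gaps.append(f"storage {d.storage_gb} GB < {MIN_STORAGE_GB}")
    return gaps


# Hypothetical inventory entries
fleet = [
    Device("eng-laptop-01", npu_tops=48, ram_gb=32, storage_gb=1024),
    Device("office-laptop-17", npu_tops=11, ram_gb=8, storage_gb=256),
]
for d in fleet:
    print(d.name, copilot_plus_gaps(d) or "meets floor")
```

Returning the list of gaps, rather than a bare yes/no, tells an IT team whether a machine needs a RAM upgrade or a full replacement.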

Developer tools further complicate the landscape. Intel's OpenVINO, AMD's Ryzen AI Software (for its NPU), and AMD's ROCm (for its GPUs) provide vendor-specific optimization paths, yet none matches the reach of cross-vendor layers such as the ONNX model format, ONNX Runtime, or Microsoft's DirectML. The best hardware means little without robust driver support and actively maintained machine learning libraries.

Ecosystem: Where Theory Meets Practice

Silicon performance becomes meaningful only when paired with software people actually use. Intel dominates in professional applications, with deep optimizations for engineering tools like AutoCAD and SolidWorks. Qualcomm targets the enterprise productivity space, optimizing for Microsoft 365 and cloud-native applications. Apple’s vertical integration gives it an edge for developers working in Xcode or creative professionals using Final Cut Pro.

This fragmentation creates clear decision points. Engineering teams running simulation software will prioritize different capabilities than IT departments deploying fleet devices for office productivity. The ecosystem extends beyond first-party support to include third-party developers and open-source communities maintaining critical libraries.

Use Case Alignment: Matching Specs to Workloads

The most powerful AI PC delivers little value if its capabilities don't match daily requirements. Local large language model inference demands different resources than real-time video processing or CAD simulation acceleration. Architectural firms running rendering workloads will prioritize GPU performance alongside NPU capabilities, while software development teams might value rapid code generation above all else.

Latency sensitivity also plays a crucial role. Applications requiring instantaneous responses—like live translation or collaborative design tools—benefit most from on-device processing, while batch operations can tolerate occasional cloud offloading. Understanding these nuances prevents overspending on unnecessary capability or underestimating future needs.
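The local-versus-cloud trade-off can be written as a simple placement rule. A minimal sketch, assuming cloud latency is just network round-trip time plus server-side inference (queuing, upload time, and retries are ignored); the function name and all timings are hypothetical.

```python
def place_inference(latency_budget_ms: float,
                    local_infer_ms: float,
                    cloud_infer_ms: float,
                    network_rtt_ms: float) -> str:
    """Decide where a request can run under a latency budget.

    Hypothetical helper. Cloud latency is modeled as round trip plus
    server-side inference time only.
    """
    cloud_total_ms = network_rtt_ms + cloud_infer_ms
    if cloud_total_ms <= latency_budget_ms:
        return "cloud-ok"   # budget tolerates the round trip; offloading is an option
    if local_infer_ms <= latency_budget_ms:
        return "local"      # only on-device inference meets the budget
    return "neither"        # too tight for both; revisit model size or hardware


# Hypothetical live translation: 150 ms budget, slow network
print(place_inference(150, local_infer_ms=60, cloud_infer_ms=40, network_rtt_ms=180))
# Hypothetical batch summarization: 5 s budget
print(place_inference(5000, local_infer_ms=900, cloud_infer_ms=300, network_rtt_ms=120))
```

The interactive case lands on the NPU even though the cloud model itself is faster, because the round trip alone exceeds the budget.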

The common thread across all four pillars remains practical applicability. Benchmarks provide helpful reference points, but the true test comes when engineers and architects apply these machines to their real work.
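One way to keep all four pillars in view during a refresh is a weighted decision matrix. A minimal sketch, assuming 0-10 scores that a team assigns from its own hands-on evaluation; the platform names, weights, and scores are hypothetical, not vendor data.

```python
PILLARS = ("hardware", "software", "ecosystem", "use_case_fit")


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of 0-10 pillar scores; weights need not sum to 1."""
    total_weight = sum(weights[p] for p in PILLARS)
    return sum(scores[p] * weights[p] for p in PILLARS) / total_weight


# Hypothetical weights for a simulation-heavy engineering team
weights = {"hardware": 3, "software": 2, "ecosystem": 2, "use_case_fit": 3}

# Hypothetical scores from the team's own testing
candidates = {
    "platform_a": {"hardware": 8, "software": 7, "ecosystem": 9, "use_case_fit": 6},
    "platform_b": {"hardware": 9, "software": 6, "ecosystem": 6, "use_case_fit": 8},
}

ranked = sorted(candidates, key=lambda n: weighted_score(candidates[n], weights), reverse=True)
for name in ranked:
    print(name, round(weighted_score(candidates[name], weights), 2))
```

Changing the weights for an office-productivity fleet can reverse the ranking, which is the point: the same hardware scores differently against different workloads.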

The 2025 AI PC Showdown: Performance Beyond the Spec Sheet

The battle for AI PC dominance has escalated beyond traditional metrics. While Intel, AMD, Qualcomm, and Apple tout impressive TOPS figures, real-world engineering applications reveal critical differences in architecture and implementation that no spec sheet can capture.

Intel Lunar Lake: The Enterprise Workhorse

Intel's latest entry pairs a 48 TOPS NPU with CPU and GPU resources for up to 120 TOPS of total platform AI performance. The x86 compatibility provides immediate value for organizations running legacy engineering software, while Thunderbolt 4 support maintains connectivity with specialized lab equipment. However, thermal constraints in sustained workloads remain a concern, particularly for computational fluid dynamics or extended machine learning training sessions.

AMD Ryzen AI 300: The Raw Power Contender

With its dedicated 50 TOPS NPU and RDNA 3.5 graphics, AMD's solution excels at parallel processing tasks. Engineers working with GPU-accelerated simulations see particular benefits, with some finite element analysis workloads reportedly completing 18% faster than on Intel equivalents. The trade-off comes in software optimization: while ROCm continues to improve, it still lags behind CUDA for certain computational workflows. Memory support is also more flexible than Intel's: Ryzen AI 300 works with LPDDR5X-7500 as well as DDR5-5600 modules, allowing larger and upgradeable memory pools for big datasets, whereas Lunar Lake's on-package LPDDR5X-8533 offers higher peak bandwidth but tops out at 32 GB.

Qualcomm Snapdragon X Elite: The Efficiency Play

Qualcomm's 45 TOPS NPU demonstrates what happens when AI acceleration meets Arm's power efficiency. In battery-operated field equipment or portable measurement devices, the Snapdragon platform delivers nearly double the runtime of x86 competitors at similar performance levels. However, the transition to Arm architecture creates compatibility headaches for legacy engineering tools, with some specialized measurement software showing 20-30% performance penalties under emulation. Thermal performance stands out as a clear advantage, maintaining 95% of peak TOPS during continuous operation.

Apple M4: The Vertical Integration Specialist

Apple's tightly controlled ecosystem shows its strengths in media processing and developer environments. Xcode's AI-assisted coding features demonstrate remarkably low latency, while Final Cut Pro's machine learning-powered tools operate seamlessly. However, the closed nature of the platform creates limitations for cross-platform development or industrial applications. Benchmark tests reveal the M4's neural engine maintains consistent performance regardless of workload duration, a testament to Apple's thermal design.

The choice between these platforms ultimately reduces to workflow requirements. Each represents a valid approach to AI acceleration, with the optimal selection depending entirely on application-specific needs rather than abstract performance claims.

What becomes clear in testing is that TOPS ratings serve as starting points rather than definitive measures. The true capability of an AI PC reveals itself only under the exact workloads it will face in daily operation, which makes hands-on evaluation more critical than ever in PC procurement decisions.
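A simple hands-on check is to compare throughput at the start of a long run with throughput at the end. A minimal sketch, assuming you can wrap one unit of your real workload (for example, one inference call) in a Python callable; the CPU-bound placeholder below is hypothetical, and a meaningful test should run for minutes so the chassis has time to heat-soak.

```python
import time
from typing import Callable


def sustained_ratio(workload: Callable[[], None], iterations: int = 200,
                    window: int = 20) -> float:
    """Throughput of the last `window` runs divided by that of the first `window`.

    A ratio near 1.0 suggests the device holds its performance; a ratio well
    below 1.0 over a long run often points to thermal or power throttling.
    """
    if iterations < 2 * window:
        raise ValueError("iterations must be at least 2 * window")
    durations = []
    for _ in range(iterations):
        start = time.perf_counter()
        workload()
        durations.append(time.perf_counter() - start)
    first_throughput = window / sum(durations[:window])
    last_throughput = window / sum(durations[-window:])
    return last_throughput / first_throughput


if __name__ == "__main__":
    # Hypothetical placeholder workload; substitute your own inference call
    ratio = sustained_ratio(lambda: sum(i * i for i in range(20_000)))
    print(f"end/start throughput ratio: {ratio:.2f}")
```

Running the same script on each candidate machine, plugged in and on battery, turns "maintains its rated performance" into a number you measured yourself.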

The AI PC Software Ecosystem: Where Promise Meets Reality

The hardware arms race in AI PCs tells only half the story. What ultimately determines success or failure is the software environment — the frameworks, drivers, and developer tools that transform silicon potential into real engineering productivity. This ecosystem landscape reveals surprising gaps and opportunities that every technical buyer should understand.

The Framework Fragmentation Problem

Today's AI developers face an array of framework options, each with different hardware optimization paths:

• Intel's OpenVINO built its reputation on computer vision workloads; its transformer support has expanded but still varies by target device

• AMD ROCm has made strides in GPU acceleration but still trails NVIDIA's CUDA ecosystem

• Qualcomm's AI Stack delivers impressive efficiency but lacks breadth of model support

• Apple Core ML offers seamless integration but runs only on Apple's own platforms

This fragmentation forces difficult choices. An engineering team standardizing on PyTorch, for instance, will find radically different performance characteristics across platforms.

The Toolchain Tradeoffs

Engineering teams must evaluate several dimensions of software support:

• Model Coverage: Which neural architectures run natively?

• Precision Support: FP16, INT8, and sparse matrix capabilities

• Framework Integration: Direct pipeline support versus conversion requirements

• Deployment Options: Containerization, cross-compilation, and edge deployment tools

Intel currently leads in breadth of supported models, while Qualcomm offers superior quantization tools. Apple provides the most seamless deployment experience, for applications that fit within its ecosystem constraints.
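Quantization tooling matters because NPUs are typically fastest at INT8 or lower precision. A minimal sketch of symmetric per-tensor INT8 quantization, the simplest scheme such tools implement; production toolchains add calibration data, per-channel scales, and zero points, none of which is shown here. The tensor values are hypothetical.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8: scale = max|x| / 127, q = round(x / scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric range [-127, 127]
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]


tensor = [0.42, -1.27, 0.05, 0.9]  # hypothetical weights
q, scale = quantize_int8(tensor)
print(q)  # [42, -127, 5, 90]
```

Each value is recovered to within half a quantization step (scale / 2); better tools shrink that error by choosing scales from real calibration data instead of a single maximum.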

The Portability Premium

Cross-vendor layers like ONNX Runtime and DirectML offer escape hatches from vendor lock-in, but with performance costs:

• ONNX models run through generic execution paths can show 15-20% lower throughput than native implementations

• DirectML provides better portability but can't access all NPU capabilities

• Vendor-specific extensions often deliver 2-3x better performance but limit flexibility

This creates a fundamental tension between optimization and future-proofing. Teams building long-term AI capabilities must carefully balance these competing priorities.
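ONNX Runtime softens this tension by accepting an ordered list of execution providers: it assigns each graph node to the first listed provider that supports it and falls back down the list for the rest. A minimal sketch, assuming the preference order below (an illustration to tune per platform and model, not a recommendation); each provider ships only in specific ONNX Runtime builds, and `model.onnx` is a hypothetical path.

```python
# Assumed preference order for illustration; tune per platform and model
PREFERRED = [
    "QNNExecutionProvider",       # Qualcomm NPU
    "VitisAIExecutionProvider",   # AMD Ryzen AI NPU
    "OpenVINOExecutionProvider",  # Intel CPU/GPU/NPU
    "CoreMLExecutionProvider",    # Apple Neural Engine / GPU
    "DmlExecutionProvider",       # DirectML on Windows GPUs
    "CPUExecutionProvider",       # always-available fallback
]


def pick_providers(available: list[str]) -> list[str]:
    """Return available providers in preference order, always ending with CPU."""
    chosen = [p for p in PREFERRED if p in available]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
        providers = pick_providers(ort.get_available_providers())
        print(providers)
        # session = ort.InferenceSession("model.onnx", providers=providers)
    except ImportError:
        # onnxruntime not installed; show the selection logic on a sample list
        print(pick_providers(["DmlExecutionProvider", "CPUExecutionProvider"]))
```

Keeping the model in ONNX and changing only this list is one way to try a vendor-specific path on each platform while retaining a portable fallback.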

The uncomfortable truth? No current AI PC platform offers a complete, polished software ecosystem. Intel leads in enterprise application support, Qualcomm in efficiency, AMD in raw throughput, and Apple in seamless integration — but each requires compromises. The most successful deployments will be those that align platform strengths with specific workflow requirements rather than chasing universal solutions.

The Future of AI PCs: Where Hardware Meets Engineering Innovation

The AI PC revolution is just beginning. What we see today—dedicated NPUs, on-device AI acceleration, and specialized silicon—represents only the first wave of a fundamental shift in computational architecture. For engineering teams, this evolution presents both unprecedented opportunities and complex challenges that will reshape technical workflows for years to come.

The Next Frontier: AI-Native Engineering Software

We're entering an era where applications will be designed from the ground up to leverage NPUs, not just retrofitted with AI features. Early examples include:

• CAD systems that use neural networks for real-time design optimization

• Simulation software with AI-powered meshing and solver acceleration

• Diagnostic tools that combine sensor data with on-device predictive models

These advancements won't just make existing workflows faster—they'll enable entirely new engineering methodologies. Imagine structural analysis tools that suggest optimizations as you design, or embedded systems that continuously self-optimize based on operational data.

The Coming Specialization Wave

Future AI PC architectures will likely diverge based on engineering disciplines:

• Mechanical/Civil: Focused on large-scale numerical computation with high memory bandwidth

• Electrical/Embedded: Optimized for low-latency signal processing and power efficiency

• Multidisciplinary: Balanced architectures for complex system simulation

This specialization will force IT teams to make more nuanced purchasing decisions, potentially maintaining different hardware fleets for different engineering groups.

Regardless of the AI PC architecture you choose, Microchip USA can deliver the components you need to build AI-powered systems. As the premier independent distributor of board-level electronics, we're experts at navigating disruptions and uncertainty in the supply chain so that you get the parts you need, when you need them. Contact us today!