SDRAM vs DRAM Memory

When it comes to computing performance, memory speed and architecture make all the difference. From gaming rigs to industrial control systems, how quickly data moves between memory and processor directly impacts responsiveness and stability.

Two types of memory, DRAM and SDRAM, lie at the heart of this performance equation. Strictly speaking, SDRAM is itself a form of DRAM; in this guide, “DRAM” refers to the older asynchronous designs that preceded it. Although the two share a common foundation, their operation and efficiency differ in key ways. In this guide, we’ll explore SDRAM vs DRAM memory, including how each works, where they’re used, and why SDRAM became the standard for modern computing.

What Is DRAM Memory?

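Dynamic Random Access Memory (DRAM) is one of the earliest and most widespread types of computer memory. It stores each bit of data as electrical charge in a tiny capacitor. Because that charge leaks away over time, every cell must be refreshed periodically (typically every few tens of milliseconds) to retain its information, hence the term “dynamic.”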
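To get a feel for what refreshing costs, the share of time a memory device spends on refresh can be estimated with simple arithmetic. The sketch below is illustrative only: the function name and the figures used (a 64 ms retention window, 8,192 refresh commands per window, and 350 ns per refresh command) are assumptions chosen to resemble a typical modern part, not values from a specific datasheet.

```python
def refresh_overhead(retention_ms: float, refresh_commands: int, refresh_cmd_ns: float) -> float:
    """Estimate the fraction of time a DRAM device is busy refreshing.

    All three parameters are hypothetical inputs for illustration:
    retention_ms     -- window within which every row must be refreshed
    refresh_commands -- number of refresh commands issued per window
    refresh_cmd_ns   -- time the device is unavailable per refresh command
    """
    # Average spacing between refresh commands, in nanoseconds.
    interval_ns = retention_ms * 1_000_000 / refresh_commands
    return refresh_cmd_ns / interval_ns


if __name__ == "__main__":
    # Assumed example values, not taken from a datasheet.
    overhead = refresh_overhead(retention_ms=64, refresh_commands=8192, refresh_cmd_ns=350)
    print(f"Refresh overhead: {overhead:.1%}")
```

With these assumed numbers, refresh occupies roughly 4 to 5 percent of the device's time, which is why refresh is usually treated as a modest but unavoidable tax on dynamic memory.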

How It Works

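Classic DRAM operates asynchronously: it responds to control signals (such as the row and column address strobes) as they arrive, rather than on the edges of a shared clock. This keeps the interface simple and cheap to produce, but the memory controller has to wait out each access before starting the next, which limits data transfer speed and efficiency.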

Advantages

· Straightforward design

· Low production cost

· High density for its era

Drawbacks

· Slower performance due to lack of synchronization

· Requires frequent refresh cycles (a trait shared by all dynamic memory, including SDRAM)

· Not ideal for high-speed or multitasking environments

Common Uses

DRAM was widely used in early PCs, embedded systems, and legacy industrial devices where cost mattered more than performance.

What Is SDRAM Memory?

Synchronous Dynamic Random Access Memory (SDRAM) revolutionized how memory interacts with processors. Unlike asynchronous DRAM, it ties every command and data transfer to the system clock, so the memory controller knows exactly which clock cycle data will be ready on instead of waiting an unpredictable amount of time.

Key Innovation

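SDRAM introduced pipelining: the memory can accept a new command before the previous one has finished, so multiple data requests overlap. This dramatically improves throughput and cuts the effective wait per request when accesses arrive back to back.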
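The effect of overlapping requests can be sketched with a simple cycle count. The model below is a deliberate simplification, not a description of any real memory controller: it assumes every request has the same fixed latency and that a pipelined device can start one new request per clock cycle. The function names are hypothetical.

```python
def cycles_unpipelined(n_requests: int, latency_cycles: int) -> int:
    """Each request must finish completely before the next one starts."""
    return n_requests * latency_cycles


def cycles_pipelined(n_requests: int, latency_cycles: int) -> int:
    """A new request starts every cycle, so only the first pays full latency.

    Simplifying assumption: one request can be issued per clock cycle.
    """
    if n_requests == 0:
        return 0
    return latency_cycles + (n_requests - 1)


if __name__ == "__main__":
    n, latency = 8, 3  # assumed example: 8 back-to-back reads, 3-cycle latency
    print("Unpipelined:", cycles_unpipelined(n, latency), "cycles")
    print("Pipelined:  ", cycles_pipelined(n, latency), "cycles")
```

Under these assumptions, eight back-to-back requests take 24 cycles without pipelining and 10 with it. Note that the latency of any single request is unchanged; the gain comes from keeping the memory busy while earlier requests are still completing.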

Evolution

SDRAM set the stage for the entire DDR (Double Data Rate) family: DDR, DDR2, DDR3, DDR4, and today’s DDR5, each delivering higher bandwidth and better energy efficiency than the generation before it.
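The bandwidth gains across generations can be approximated with a back-of-the-envelope formula: peak bandwidth is roughly the transfer rate multiplied by the bus width. The sketch below assumes a standard 64-bit module data bus and uses common speed grades as examples; the function name is hypothetical, and real-world throughput will be lower than these theoretical peaks.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    mega_transfers_per_s -- data rate in MT/s (e.g. 3200 for DDR4-3200)
    bus_width_bits       -- data bus width; 64 bits is assumed here
    """
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1_000_000 * bytes_per_transfer / 1_000_000_000


if __name__ == "__main__":
    # Example speed grades chosen for illustration.
    examples = {
        "SDR SDRAM (PC133)": 133,
        "DDR-400": 400,
        "DDR3-1600": 1600,
        "DDR4-3200": 3200,
        "DDR5-4800": 4800,
    }
    for name, rate in examples.items():
        print(f"{name:<18} {peak_bandwidth_gb_s(rate):6.1f} GB/s")
```

By this estimate, a DDR4-3200 module peaks at about 25.6 GB/s, more than twenty times the roughly 1.1 GB/s of PC133 SDRAM on the same bus width.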

Benefits

· Synchronization with the system clock for faster, predictable performance

· Reduced latency through pipelining

· Foundation for modern DDR generations

· Ideal for high-performance computing environments

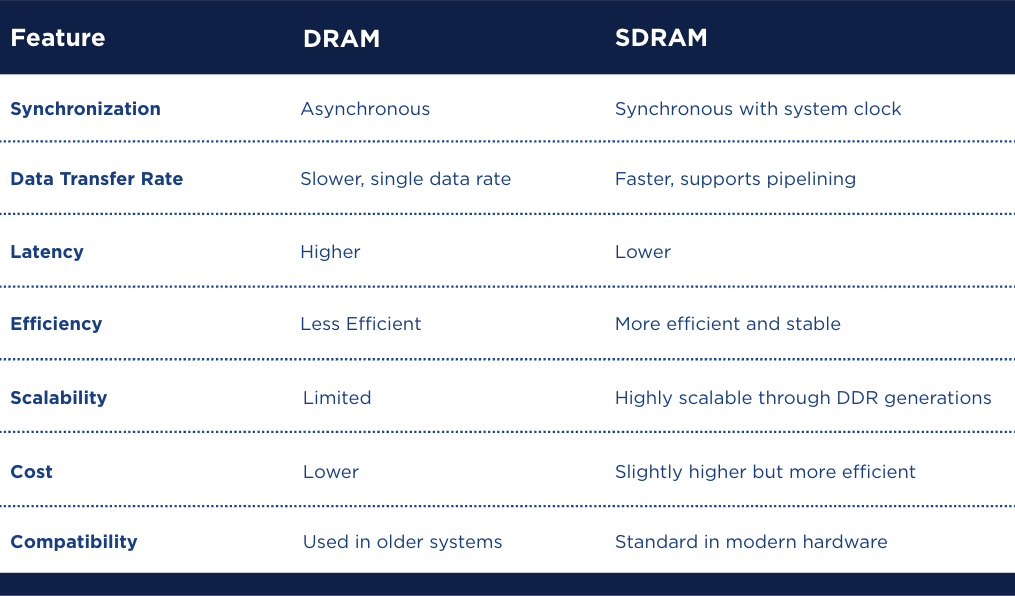

SDRAM vs DRAM Memory: Key Differences

In short, SDRAM outperforms DRAM in nearly every category except cost, which is why it dominates modern computing markets.

Applications of SDRAM and DRAM Memory

When it comes to real-world use, SDRAM and DRAM serve very different purposes in modern electronics. While SDRAM powers today’s high-speed computing and connected devices, traditional DRAM continues to find value in simpler, legacy, and cost-sensitive applications.

SDRAM Applications

· Modern computers, laptops, and gaming consoles

· Data centers and networking equipment

· Industrial automation systems and IoT devices using DDR-based SDRAM

· AI and edge computing systems requiring high throughput

DRAM Applications

· Legacy industrial controllers and cost-sensitive embedded systems

· Development boards or training kits where simplicity is preferred

· Low-speed electronics where performance demands are minimal

In today’s electronics manufacturing, synchronization gives SDRAM a clear advantage, allowing real-time systems to achieve stable, predictable data flow.

The Current Market for SDRAM vs DRAM Memory

SDRAM and its DDR successors dominate global memory demand, while traditional DRAM has become a niche product used primarily for maintenance and repair of older equipment.

Trends

· DDR4 and DDR5 continue to expand in server, AI, and edge applications.

· The classic DRAM market is shrinking as manufacturers phase out older lines.

· Specialized variants such as LPDDR (low power) and HBM (stacked, high bandwidth) are pushing efficiency even further.

Pricing & Supply Chain Insights

Global chip supply fluctuations still impact pricing. Manufacturers such as Samsung, SK Hynix, and Micron lead SDRAM and DDR production, while independent distributors like Microchip USA bridge supply gaps - offering access to both modern and legacy memory components.

Future Outlook

Anticipated next-gen standards such as DDR6, along with hybrid memory models, promise even greater performance, supporting AI, autonomous systems, and high-bandwidth 3D architectures.

The Evolution of Memory Technology

From early DRAM to today’s synchronized SDRAM and DDR modules, memory design has evolved rapidly:

· Bandwidth has increased by orders of magnitude.

· Latency has dropped to meet real-time data processing needs.

· Power efficiency has improved thanks to LPDDR and 3D stacking.

Emerging innovations like HBM (High Bandwidth Memory) and LPDDR6 are setting new standards for efficiency and compact performance.

Learn More: Ultimate Guide to High Bandwidth Memory

Which One Is Right for You?

Choosing between DRAM and SDRAM depends on your system requirements:

· For performance-focused systems (servers, PCs, embedded AI): SDRAM / DDR

· For legacy or low-cost designs: Traditional DRAM

For most modern devices, SDRAM-based solutions are the clear standard due to their synchronization, scalability, and compatibility with advanced processors.

Memory Performance and Sourcing Solutions

SDRAM changed computing by introducing synchronization - a feature that made data processing faster, more predictable, and more efficient. As DDR5, and eventually DDR6, take over next-generation devices, SDRAM remains the backbone of global computing architecture.

At Microchip USA, we provide:

· SDRAM, DDR, DRAM, and other memory components

· Fast quotes and global support

· Obsolete part alternatives for legacy hardware

Find the right memory solution for your design.

FAQs

What’s the difference between SDRAM and DRAM memory?

SDRAM is synchronized with the system clock, allowing faster and more efficient data transfers than asynchronous DRAM.

Is SDRAM compatible with DDR memory types?

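In terms of technology, yes: DDR (DDR, DDR2, DDR3, DDR4, DDR5) is an evolution of SDRAM. The modules themselves are not interchangeable, however. Each generation uses its own voltage, signaling, and connector keying, so a module only works in a slot designed for that generation.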

Why is SDRAM faster than DRAM?

It synchronizes with the system clock and supports pipelined operations, so requests overlap instead of waiting for one another.

Are DRAM components still being manufactured?

Yes, but production is limited to specific industrial and legacy-use cases.

Learn More: The Global Memory Chip Shortage – DRAM, DDR4, NAND Flash and HBM Memory

Which memory is more power-efficient?

SDRAM and its DDR variants are more efficient due to synchronized operation, advanced power management features, and the lower operating voltages introduced with each DDR generation.